Research

Digital technologies are increasingly mediated by artificial intelligence systems whose inner workings are complex and often opaque, raising questions about their societal impact. Our group applies computational and engineering expertise to study the safety and integrity of online ecosystems. We combine scalable computational methods with causal experimental designs to audit algorithmic systems, assess the safety of information exposure across digital platforms, and evaluate the risks posed by large language models, from adversarial vulnerabilities to persuasive capabilities. By studying the interactions between algorithms and human behavior, we aim to understand how digital technologies shape information exposure, public discourse, and societal outcomes.

Studies of Large Language Models

Investigating the safety, behavior, and societal impact of large language models through red teaming, auditing, and experimental evaluation.

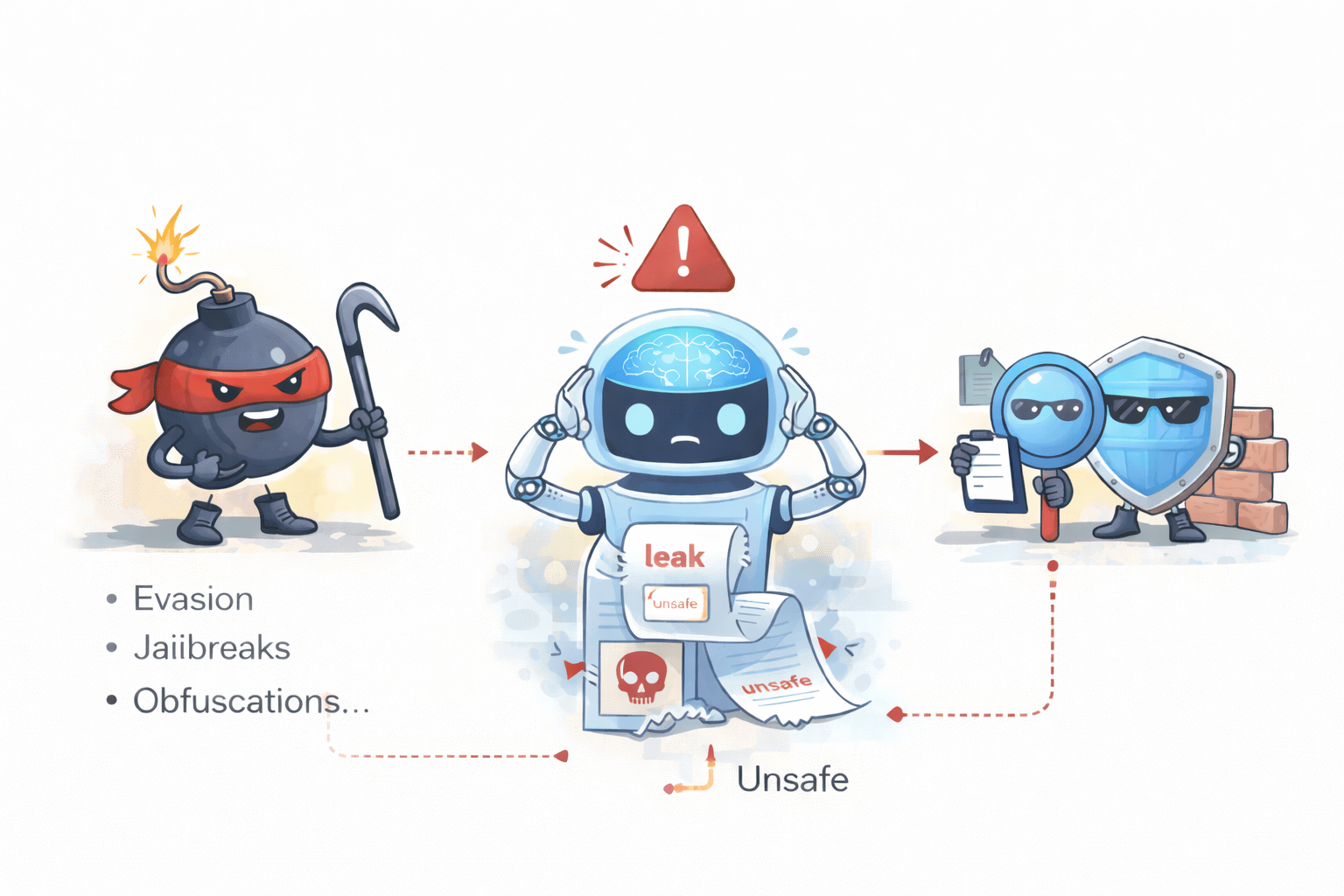

LLM Red Teaming & Safety

Adversarial testing and safety evaluation of large language models

Using Therapeutic Interactions to Improve General-Purpose LLM Safety

Adversarial Red Teaming for Multi-Agent Systems

Agentic AI Persuasion

Understanding AI agents' persuasive capabilities in realistic social settings

Persuasion in the Wild

The Dynamics of Bias in Multi-Agent Decision Systems

LLM-Mediated Information Systems

How large language models reshape information exposure and consumption

Systematic Audit of LLM Summarization

Safeguarding Personalization by LLM-Assisted Reranking

Studies of Sociotechnical Systems

Examining how algorithmic systems, human behavior, and platform design interact to shape online experiences and societal outcomes.

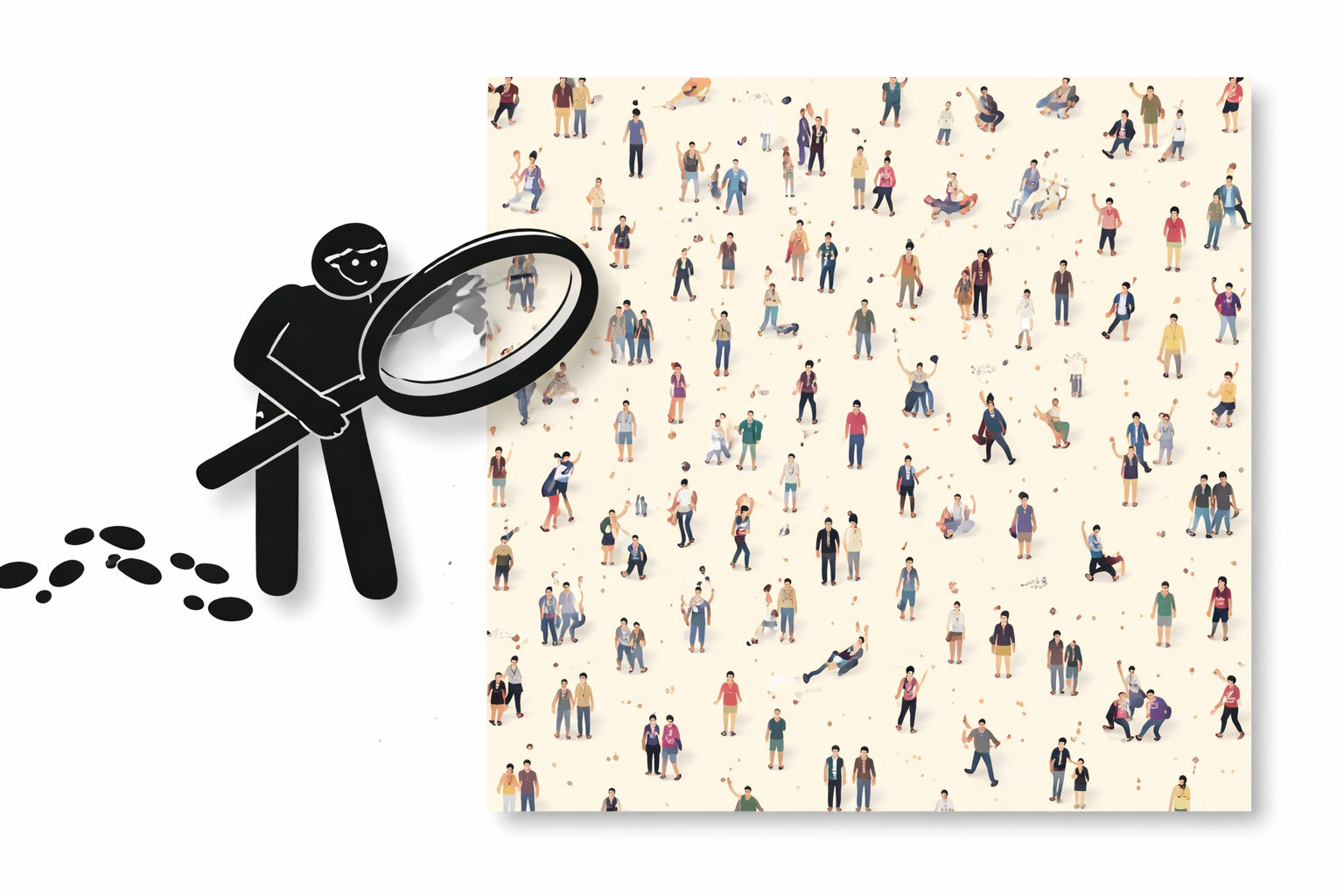

Prevalence of Problematic Content

Comprehensive evaluation of AI systems in real-world deployment contexts

Audit of Young Adults' Exposure When Searching for Sexual Content

Publications

- Hosseinmardi, H., Ghasemian, A., Clauset, A., Mobius, M., Rothschild, D. M., & Watts, D. J. (2021). Examining the consumption of radical content on YouTube. Proceedings of the National Academy of Sciences, 118(32).

- Horta Ribeiro, M., Hosseinmardi, H., West, R., & Watts, D. J. (2023). Deplatforming did not decrease Parler users' activity on fringe social media. PNAS Nexus, 2(3).

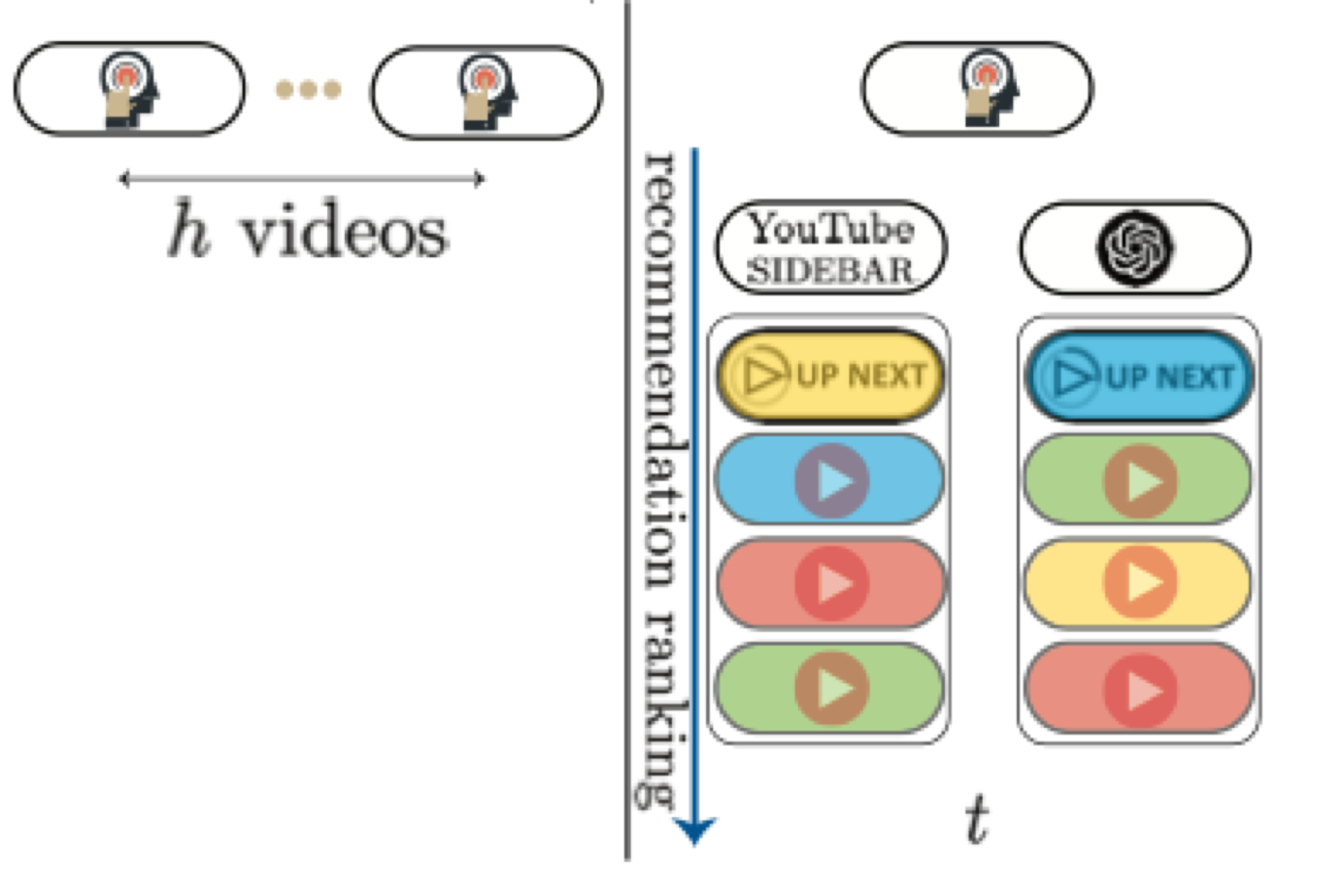

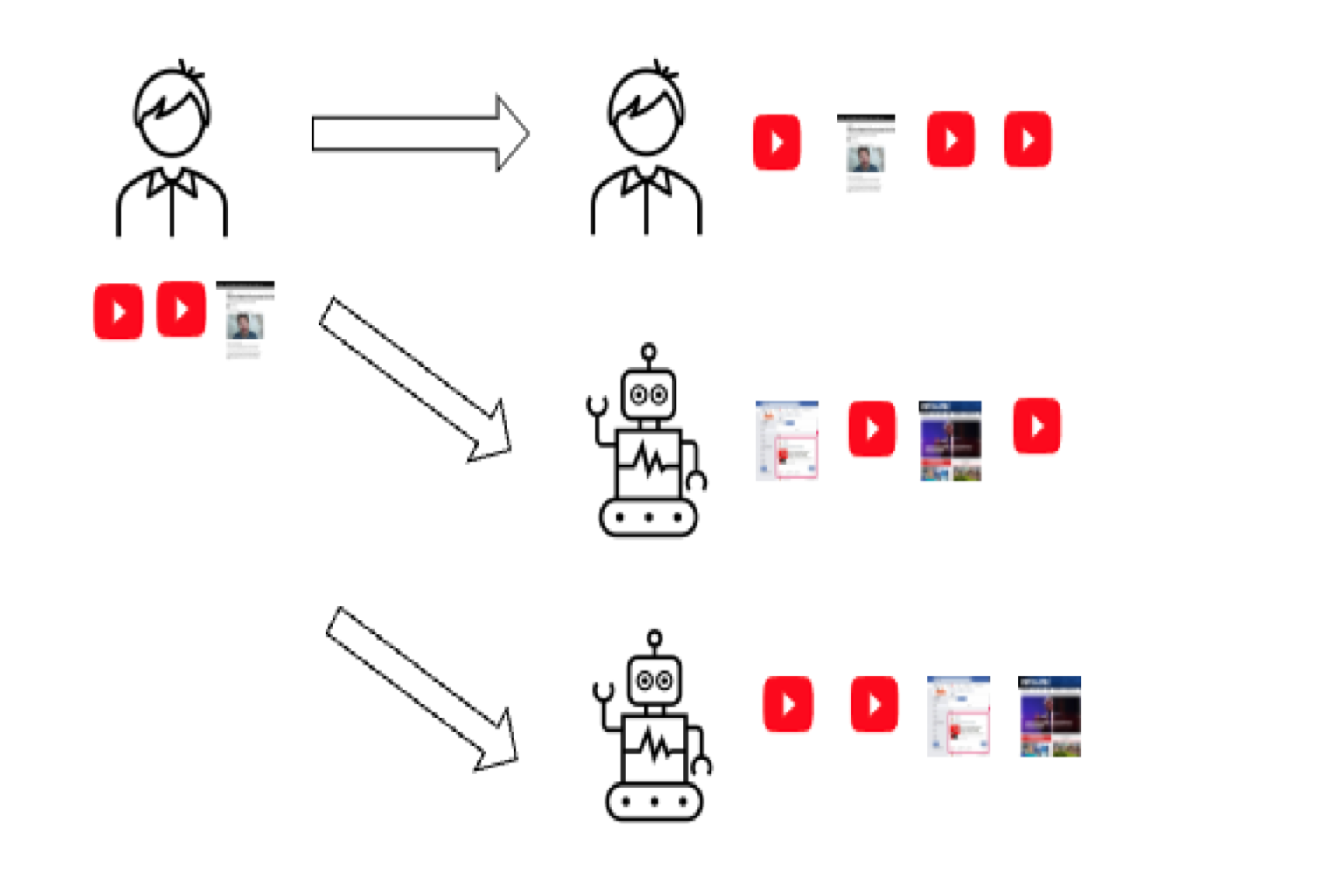

Counterfactual Experiment Design

Causal inference frameworks for auditing algorithmic systems

Publications

- Hosseinmardi, H., Ghasemian, A., Rivera-Lanas, M., Horta Ribeiro, M., West, R., & Watts, D. J. (2024). Causally estimating the effect of YouTube's recommender system using counterfactual bots. Proceedings of the National Academy of Sciences, 121(8).

Studies of Information Ecosystems

Understanding how information flows, fragments, and influences society through traditional and digital media platforms.

Our Shared Reality

Information ecosystem - mainstream

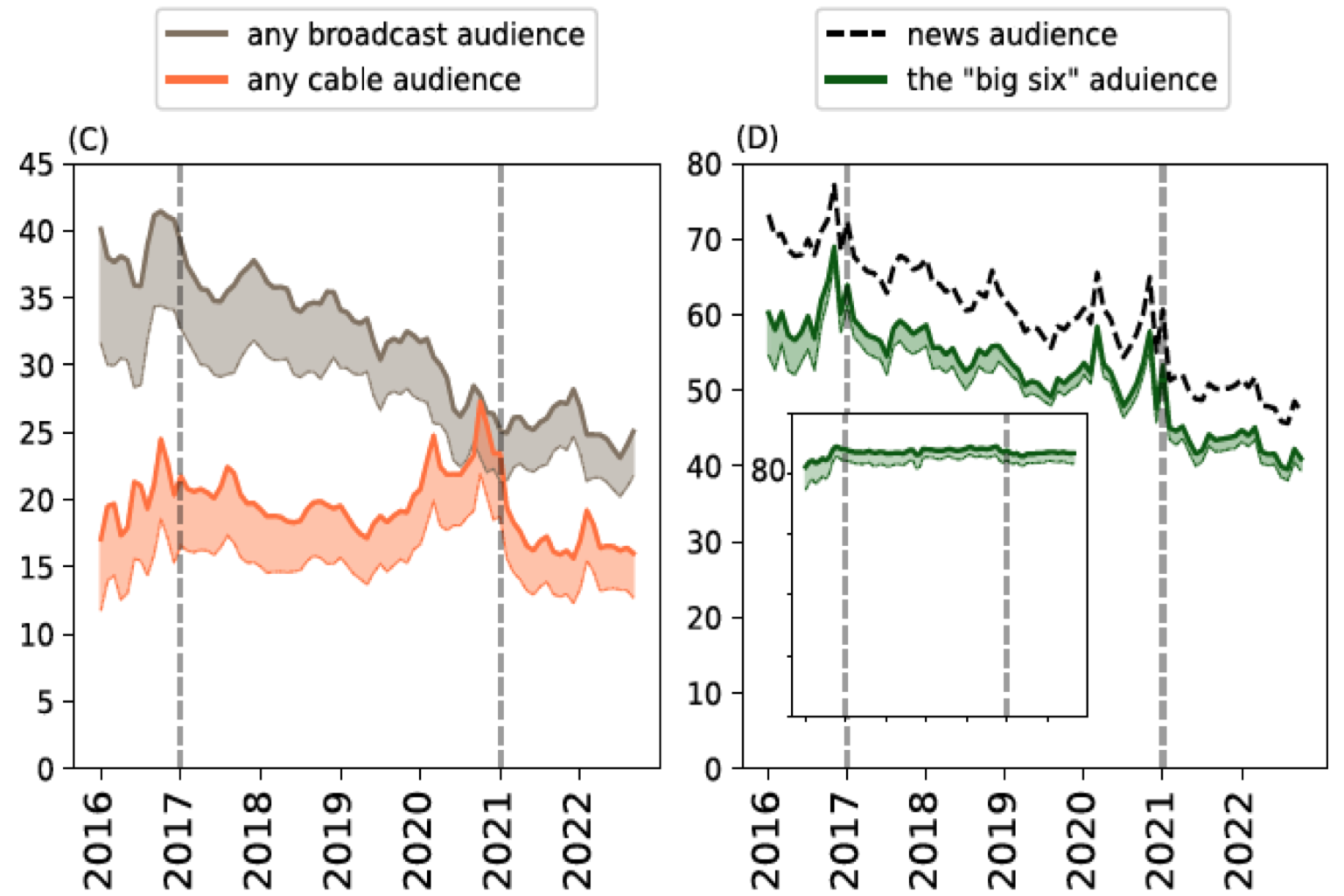

Media Fragmentation Analysis

Cross-platform information consumption patterns

- Muise, D., Hosseinmardi, H., Howland, B., Mobius, M., Rothschild, D., & Watts, D. J. (2022). Quantifying partisan news diets in Web and TV audiences. Science Advances, 8(28).

Computational Methods

Developing novel computational and statistical methods for studying complex sociotechnical systems.

Statistical Inference on Networks

Developing robust measurement frameworks for online discourse

- Ghasemian, A., Hosseinmardi, H., Galstyan, A., Airoldi, E. M., & Clauset, A. (2020). Stacking models for nearly optimal link prediction in complex networks. Proceedings of the National Academy of Sciences, 117(38).

- Ghasemian, A., Hosseinmardi, H., & Clauset, A. (2019). Evaluating overfit and underfit in models of network community structure. IEEE Transactions on Knowledge and Data Engineering, 32(9).

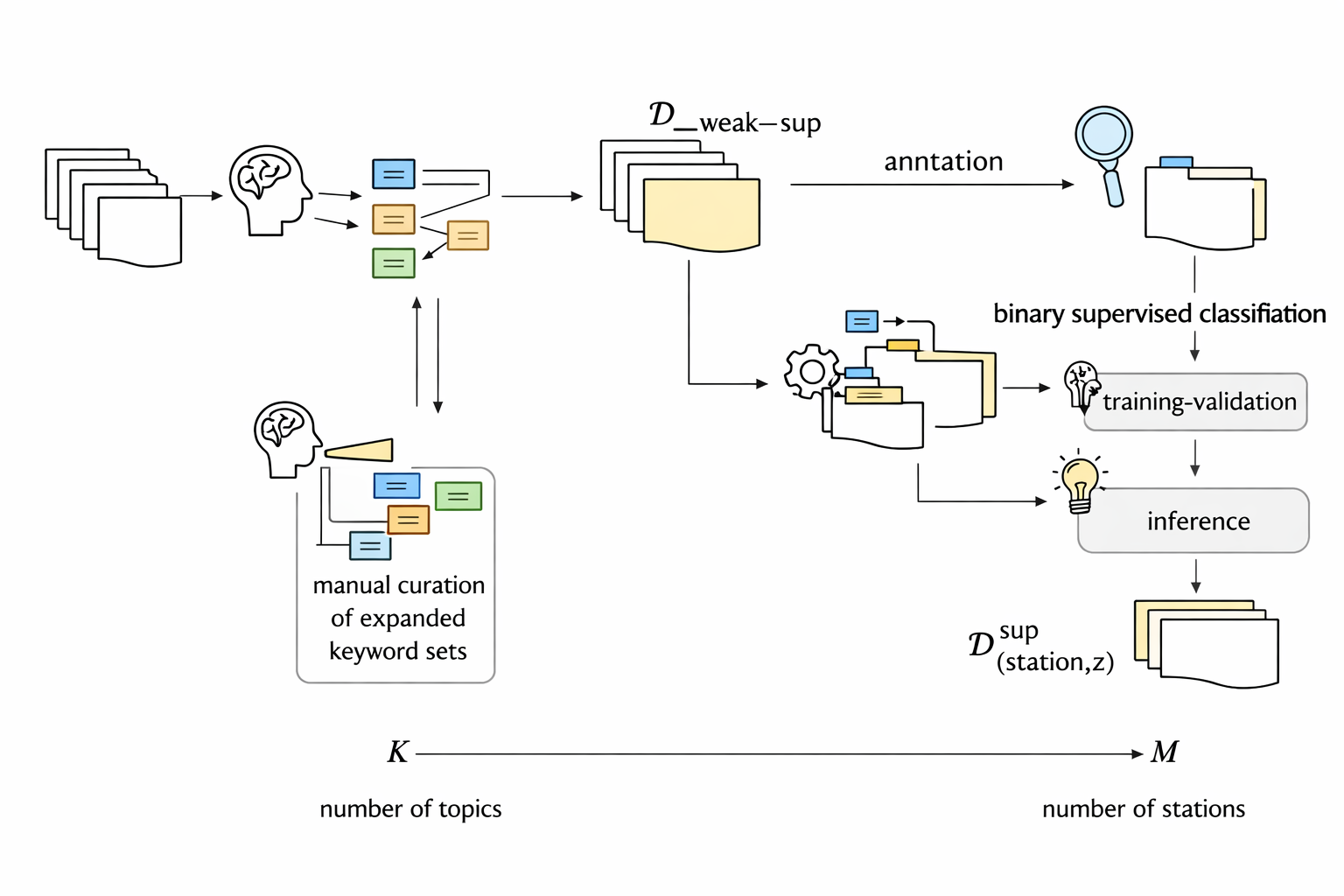

Open-World Detection and Inference at Scale

Detection and prediction methods for large-scale real-world data under minimal supervision